On 27 November Proun+ launched on iOS! The first reviews are extremely positive, both from the press and from users. We also got a big feature in the European App Store, so it seems like Apple itself also likes the game. :) Here are some quotes from the reviews and the launch trailer:

"A bright, searingly good twitch racer that takes the fundaments of the genre and builds something staggeringly entertaining on top of them."

9/10

PocketGamer.co.uk

"Previously a successful indie release on the PC, it deserves your attention and patience. [...] Throw in a funky jazz based soundtrack and Proun+ has a lot going for it."

4/5 stars

148Apps.com

"this self-styled “journey through modern art” exudes an endearing weirdness that sets it apart and nestles it in your brain. Like that off-beat game you used to play, that you’re convinced only you can remember, which you can’t possibly forget."

4.5/5 stars

Gamezebo.com

Proun+ is available in the App Store for iPhone and iPad for $3.99 here.

Sunday, 30 November 2014

Saturday, 22 November 2014

Why good matchmaking requires enormous player counts

Good matchmaking is an important part of creating an online multiplayer game. One thing you may not realise is that no matter how you build it, truly good matchmaking requires enormous numbers of players. Awesomenauts often has well over 1,000 people playing the game at the same time, which is a very good number for an indie game. It certainly sounds like a lot to me, but it is only a fraction of what would be needed to do everything we would want to do with matchmaking. Today I am going to explain why tens of thousands of concurrent players are needed for truly awesome matchmaking.

Matchmaking has two main goals. The first is to let people play together who will have a good internet connection to each other. We would like to avoid Australians playing together with Europeans because their ping will be very high. High ping decreases the quality of the game experience, especially in a fast and highly competitive game like Awesomenauts. Finding good connections is more complex than simply looking at distance: sometimes you have a worse connection to your neighbour than to someone on the other side of the continent. The internet is just very unpredictable and random when it comes to connection quality.
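A minimal sketch of choosing opponents by measured ping instead of geographic distance. The player names, ping values and the 120 ms threshold are all hypothetical, and gathering the measurements is not shown; the point is only that the filter looks at the measured connection, so a badly routed neighbour is rejected while a well connected player far away is accepted.

```python
# Assumed threshold for an acceptable round-trip time (hypothetical value).
MAX_PING_MS = 120

def good_connections(player, ping_ms, max_ping=MAX_PING_MS):
    """Return the players whose *measured* ping to `player` is acceptable.

    `ping_ms` maps (player, other_player) pairs to round-trip times in ms.
    Geography is deliberately ignored: only the measurement counts.
    """
    return sorted(other for (a, other), rtt in ping_ms.items()
                  if a == player and rtt <= max_ping)

# Hypothetical measurements.
ping_ms = {
    ("anna", "bram"): 180,    # next-door neighbour, but a bad route
    ("anna", "chloe"): 45,    # other side of the continent, good route
    ("anna", "dmitri"): 310,  # other side of the world
}
print(good_connections("anna", ping_ms))  # ['chloe']
```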

The second main goal of matchmaking is to let people play together who have similar skill. There is no fun in getting beaten by someone who is way better than you, and n00bstomping gets old really quickly as well.

Now that we know our core goals, let's try to estimate how much 1,000 concurrent players really is. I am going to simplify things and use a lot of assumptions, but I think the ballpark figures are realistic enough to communicate my point.

The first step is to look at how often these players are actually available for matchmaking. An Awesomenauts match takes on average somewhere around 20 minutes, so players are available for matchmaking once every twenty minutes:

| 1,000 / 20 = 50 players per minute |

We don't want to matchmake with people who are too far away, and players are spread all over the globe. Let's say that the average player would have a good enough connection to one third of all players. In reality players are not spread equally, since Awesomenauts is more popular in some countries than in others, and because of time zones. Let's work with that one third though:

| 50 / 3 = 16.7 players per minute |

Next step is skill. Let's say we consider one third of all players to be close enough in skill to make for a fun match:

| 16.7 / 3 = 5.6 players per minute |

OUCH! We are already down from 1,000 concurrent players to only 5.6 suitable players per minute, and this is while looking at only the most basic of assumptions...

For perfect matchmaking I would want to split players further. A common request from Awesomenauts players is to have unranked and ranked multiplayer. If we added this, the split between these modes would probably not be equal: one mode would likely get more players than the other. Let's assume one third of all players would play one mode, and two thirds would play the other mode. We need to split the already small numbers further, because even in unranked matchmaking we still want to match players of similar skill to create fun matches. This is how few players we would have left in the smaller of the two modes:

| 5.6 / 3 = 1.9 players per minute |

Another common request from the Awesomenauts community is to split pre-mades from solo-queuers. A pre-made is a group of people who form a team by hand in the lobby before the match, while solo-queuers are put together in a team with complete strangers by the matchmaker. Pre-mades potentially have a big advantage because their teamwork is likely to be much better. Ideally, pre-mades would therefore only play against other pre-mades. How many pre-mades there are varies wildly with the skill level of the players (highly skilled players generally play in pre-mades much more often). Let's assume that on average one fourth of all players are in a pre-made:

| 1.9 / 4 = 0.46 players per minute |

Since we are talking about perfect matchmaking, let's have another look at our skill-based matchmaking. Above I assumed that one third of all players is a good enough match in skill. In reality the top players are so much better than the rest that this is far from ideal: the top 5% of players are enormously better than the top 33%. The more precisely we could match based on skill, the better. I think we would need to be at least three times more precise than we were above for ideal matchmaking:

| 0.46 / 3 = 0.15 players per minute |
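The whole chain of estimates above can be replayed in a few lines, which also makes it easy to try other assumptions. A minimal Python sketch: every fraction is one of the assumptions from this post (not measured data), and the step labels are my own. Because it does not round between steps, some intermediate values differ slightly from the rounded figures above (1.85 instead of 1.9, for example).

```python
# Back-of-the-envelope matchmaking funnel. All numbers are assumptions from the post.
CONCURRENT_PLAYERS = 1_000
MATCH_MINUTES = 20  # average match length: players re-enter matchmaking this often

FILTERS = [
    ("good connection", 1 / 3),
    ("similar skill", 1 / 3),
    ("smaller of ranked/unranked", 1 / 3),
    ("pre-mades vs solo-queuers", 1 / 4),
    ("three times stricter skill match", 1 / 3),
]

def funnel(concurrent=CONCURRENT_PLAYERS, match_minutes=MATCH_MINUTES, filters=FILTERS):
    """Yield (step, suitable players per minute) after each filter is applied."""
    rate = concurrent / match_minutes  # players entering matchmaking per minute
    yield ("available", rate)
    for name, fraction in filters:
        rate *= fraction
        yield (name, rate)

for step, rate in funnel():
    print(f"{step:35s} {rate:6.2f} players per minute")
```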

I can imagine some more criteria for ideal matchmaking (like supporting more game modes in matchmaking), but I think the point is quite clear already. With 1,000 concurrent players it will take more than half an hour to fill a match! Obviously this is totally unacceptable. Here's a summary of all the criteria I have mentioned so far:

Let's say it is fine to let players wait two minutes for a match to fill up. That gives us 2 × 0.154 ≈ 0.31 suitable players during the time available for matchmaking. To reach the 6 players needed for a 3v3 match we would therefore need 6 / 0.31 ≈ 19.4 times as many concurrent players. We started with 1,000, so we need about 19,400 concurrent players. Of course there is a big daily fluctuation in the number of players (there are fewer players deep in the night and early in the morning), so to also have good matchmaking during the slow hours we would need three times more players still. Thus we would need roughly 58,000 concurrent players at peak for good matchmaking. That probably equals over 5 million unique players each month. Holy cow, that is a lot!
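The scaling step can be written as a small function. This is a sketch of the same arithmetic, assuming (as the estimate does) that the number of suitable players grows linearly with the number of concurrent players; the parameter names are my own.

```python
def required_concurrency(suitable_per_minute, base_concurrent=1_000,
                         players_per_match=6, max_wait_minutes=2,
                         off_peak_factor=3):
    """Estimate the peak concurrent players needed to fill a match within max_wait_minutes.

    Assumes suitable players scale linearly with concurrent players, and that
    off_peak_factor extra headroom covers the quiet hours of the day.
    """
    suitable_in_window = suitable_per_minute * max_wait_minutes
    scale = players_per_match / suitable_in_window  # how many times more players we need
    return base_concurrent * scale * off_peak_factor

# Suitable players per minute after all filters from the estimate above.
rate = 1_000 / 20 / 3 / 3 / 3 / 4 / 3   # about 0.154
print(round(required_concurrency(rate)))  # 58320, i.e. roughly 58,000
```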

When building a multiplayer game it is important to think about this. Ideal matchmaking requires enormous player counts, and if your matchmaking is built assuming such player counts will be there you might make something that works really badly for more realistic numbers. Therefore the ideal matchmaking system is flexible: it brings perfect matchmaking when there are tons of players but also makes the best of a small player count.
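One common way to get that kind of flexibility is to start with a strict skill window and widen it the longer a player waits. This is a generic technique and not necessarily what the new Awesomenauts matchmaker does; the ratings and all tuning constants below are hypothetical.

```python
from dataclasses import dataclass

# Assumed tuning values, purely illustrative.
BASE_TOLERANCE = 50.0     # strict skill window: enough when the queue is busy
WIDEN_PER_SECOND = 2.0    # how quickly the window grows while a player waits
MAX_TOLERANCE = 400.0     # hard limit: never match players further apart than this

@dataclass
class Ticket:
    name: str
    skill: float            # hypothetical skill rating
    waited_seconds: float   # time spent in the queue so far

def tolerance(ticket: Ticket) -> float:
    """Acceptable skill gap for this player, widening the longer they wait."""
    return min(BASE_TOLERANCE + WIDEN_PER_SECOND * ticket.waited_seconds, MAX_TOLERANCE)

def compatible(a: Ticket, b: Ticket) -> bool:
    """Two players may be matched only if the skill gap fits both of their windows."""
    gap = abs(a.skill - b.skill)
    return gap <= tolerance(a) and gap <= tolerance(b)

newcomer = Ticket("newcomer", skill=1500, waited_seconds=0)
veteran = Ticket("veteran", skill=1620, waited_seconds=90)
print(compatible(newcomer, veteran))  # False: the newcomer's window is still 50

newcomer.waited_seconds = 40
print(compatible(newcomer, veteran))  # True: the window has grown to 130
```

With lots of players the strict window fills matches before it has time to widen; with few players the same code trades some match quality for a shorter wait.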

Despite all of this we can still make big improvements to the matchmaking system in Awesomenauts. We are aware of this and are therefore rebuilding the entire matchmaking system from the ground up in a much smarter and much more flexible way than is currently in the game. I am sure this will bring a big improvement, but at the same time it is important to have realistic expectations: no matter how well we build our matchmaking, the player count required for 'perfect' matchmaking is unrealistic for all but the few most successful games in the world.

Saturday, 8 November 2014

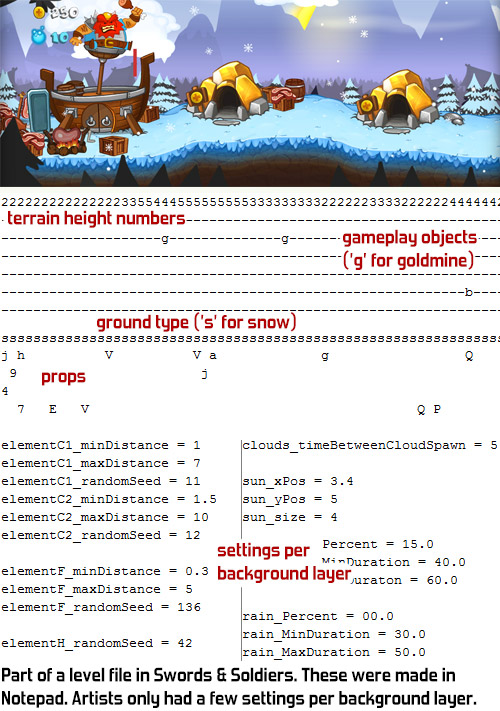

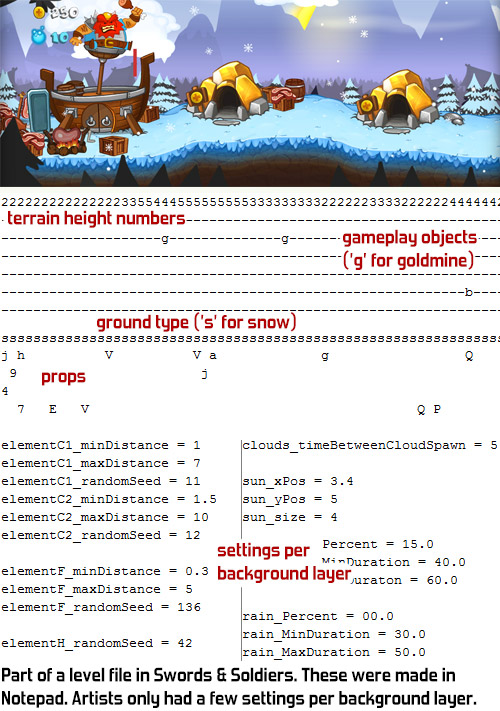

Using 2D daylight assets to create a night level

Changing the lighting in hand-drawn art is a challenge. In 3D games you can simply modify the lights, but 2D assets offer no such option. In our new game Swords & Soldiers II all the level assets have their lighting drawn in. Of course you can ask the artist to redraw the image with different lighting, but that costs a lot of additional time and texture memory. We managed to reuse our daylight textures to create convincing nighttime levels, so today I would like to explain the tricks used to achieve that goal.

Today's post is all about the artistry of Ronimo artist Ralph Rademakers. He uses images drawn by Adam Daroszewski* and Gijs Hermans to decorate the levels in Swords & Soldiers II. Ralph keeps surprising me with the unexpected tricks he manages to pull off with our internal tools.

*By the way, note that Adam works as a freelancer these days. He drew most of the level props in this post and you can see some more of his amazing work here.

There is basically a whole series of tricks that Ralph uses to turn day into night. I'll let the images do most of the talking today:

Even in the night a form of atmospheric perspective can be used: in the video below the smoke in the foreground is much brighter than the smoke in the background to suggest additional depth. When I built the recolouring shaders and tools I never expected them to be used on almost every object in the levels. Also, note how the stars have been made using particles to make them blink and appear in random places for a more lively look.

As a starting point Ralph usually chooses one colour multiplier for all objects in the level. He tweaks the colour per object to make it look exactly right. In the end few objects use the same colour multiplier, but they all started from the same point.
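A minimal sketch of the "shared starting multiplier, then per-object tweaks" workflow, including the brighter foreground smoke used for atmospheric perspective. It assumes the recolouring boils down to multiplying each daylight texel by a per-object RGB multiplier; the actual shaders are not described in this post, and all colour values and object names are hypothetical.

```python
# Hypothetical starting point shared by every object in the night level (darker, bluer).
BASE_NIGHT_MULTIPLIER = (0.35, 0.40, 0.65)

def tweak(multiplier, scale):
    """Per-object adjustment of the shared multiplier, clamped to the 0..1 range."""
    return tuple(min(1.0, m * s) for m, s in zip(multiplier, scale))

def recolour(rgb, multiplier):
    """Component-wise multiply of a daylight RGB colour (0..1 floats)."""
    return tuple(c * m for c, m in zip(rgb, multiplier))

# Every object starts from the same base and is then tweaked by hand.
object_multipliers = {
    "house": BASE_NIGHT_MULTIPLIER,
    "smoke_foreground": tweak(BASE_NIGHT_MULTIPLIER, (1.6, 1.6, 1.3)),  # brighter: close by
    "smoke_background": tweak(BASE_NIGHT_MULTIPLIER, (0.8, 0.8, 0.9)),  # darker: far away
}

daylight_smoke = (0.9, 0.9, 0.85)  # hypothetical texel from a daylight texture
for name, multiplier in object_multipliers.items():
    print(name, [round(c, 2) for c in recolour(daylight_smoke, multiplier)])
```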

It surprises me how often these gradients are reused: for fog, for lights, for lens flares and even just for hiding objects that should not be visible from certain viewpoints. The big downside of this approach is that it results in a lot of overdraw, which is also the main performance bottleneck in Awesomenauts. Good thing modern video cards are so insanely fast...

The lights in the bar below blink to make the scene more dynamic. However, blinking lights attract the eye too much while the focus should be on the gameplay. Therefore the blinking is not between on and off, but between the more subtle slightly-bright and extra-bright. Also note how the blinking lights illuminate the barmaid's arm.
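A minimal sketch of a light that pulses between slightly-bright and extra-bright instead of switching on and off. The brightness factors and period are hypothetical, and the smooth sine wave is my assumption; a hard toggle between the same two values would fit the description just as well. The same value can also drive a glow overlay, such as the light on the barmaid's arm, so both stay in sync.

```python
import math

# Assumed brightness factors: the light never switches off, it only pulses.
SLIGHTLY_BRIGHT = 1.2
EXTRA_BRIGHT = 1.6

def blink_brightness(time_seconds, period_seconds=1.5):
    """Brightness multiplier that pulses between SLIGHTLY_BRIGHT and EXTRA_BRIGHT."""
    # Sine wave remapped from [-1, 1] to [0, 1].
    phase = (math.sin(2.0 * math.pi * time_seconds / period_seconds) + 1.0) / 2.0
    return SLIGHTLY_BRIGHT + (EXTRA_BRIGHT - SLIGHTLY_BRIGHT) * phase

for t in (0.0, 0.375, 0.75, 1.125):
    lamp = blink_brightness(t)
    # Hypothetical glow sprite on the arm: fully visible when the lamp is extra-bright.
    arm_glow_alpha = (lamp - SLIGHTLY_BRIGHT) / (EXTRA_BRIGHT - SLIGHTLY_BRIGHT)
    print(f"t = {t:5.3f}s  lamp = {lamp:.2f}  arm glow alpha = {arm_glow_alpha:.2f}")
```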

This bar is also a great example of Ralph adding tons of little animated details, like the drunk guy on the roof. Many of those details will likely not be seen by most players, but the total effect of having such a detailed world is very strong, even for players who don't look at it specifically.

As a programmer, when thinking about lighting in 2D games I immediately start considering technical solutions. I would look at things like normal maps (very possible in 2D) or automatic rim lighting. However, more creative, art-driven solutions often work much better, especially when creating a visual style with such a painterly look as in Swords & Soldiers II. The simple yet clever techniques Ralph used to create this nighttime level are a great example of this.

Saturday, 25 October 2014

Proun+ gets its first trailer!

We have released the first trailer for Proun+, the bigger, better Proun that is coming to 3DS, iOS and Android! Proun+ is being made together with Engine Software and will have six new tracks and a completely new soundtrack: there are more songs, and all of them have now been recorded by real musicians for a much better sound. In this trailer you can hear the new version of one of the old songs and see one of the awesome new levels in action, plus footage from some of the original tracks. I am really hyped for the return of Proun, so I hope you like it!

Saturday, 18 October 2014

Area based depth of field blur

While working on the visual style for my weird live performance game Cello Fortress I came up with a new technique for depth of field blur: area based depth of field blur. As far as I know this is not an existing technique so today I would like to explain how it works.

The visual style for Cello Fortress is far from finished at the moment, but I decided early on that I wanted strong depth of field blur to play a major role. I had already implemented depth of field blur for Proun (which by the way is coming to 3DS, iOS and Android soon!) and I copied that to Cello Fortress. Proun does not have blur on the foreground, so I added that and tried it in-game. The result turned out to not work at all, as you can see in this image (be sure to click the image for a larger version, since this is difficult to see in these small blog-sized images):

The problem is that with standard depth of field blur, only one specific distance to the camera is sharp. In Cello Fortress the camera looks down on the battlefield diagonally, which means that that specific distance looks like a circle. Even weirder is that the part of the screen that is closest to the camera is almost at the centre of the screen: that is the spot the camera hangs exactly above. The result is that both the edges of the screen and the centre are blurred. Of course it is totally undesirable for the centre of the gameplay to be blurry.
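A small sketch of why the sharp area becomes a ring. It assumes blur strength is based on the Euclidean distance to the camera (implementations based on view-space depth behave differently), and the camera height, focus distance and focus range are hypothetical. The ground point straight below the camera is only 20 units away, so it gets full blur, and only points around a horizontal distance of about 22.4 (the square root of 30² − 20²) are sharp.

```python
import math

def standard_dof_blur(point, camera, focus_distance, focus_range):
    """Classic DoF: 0 (sharp) at exactly focus_distance from the camera, up to 1 (max blur)."""
    distance = math.dist(point, camera)
    return min(abs(distance - focus_distance) / focus_range, 1.0)

# Hypothetical setup: camera hanging 20 units above the origin, focused 30 units away.
CAMERA = (0.0, 20.0, 0.0)
FOCUS_DISTANCE = 30.0
FOCUS_RANGE = 10.0

# Ground points at increasing horizontal distance from the spot below the camera.
for x in (0.0, 10.0, 22.4, 40.0):
    blur = standard_dof_blur((x, 0.0, 0.0), CAMERA, FOCUS_DISTANCE, FOCUS_RANGE)
    print(f"x = {x:5.1f}  blur = {blur:.2f}")
```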

A simple solution I then tried was to broaden the area that is sharp:

The goal was to have strong depth of field blur as a core part of the visual style for Cello Fortress. So far we either have depth of field blur that interferes with the gameplay, or no depth of field blur at all in the foreground. We need something better:

Once I drew where I wanted blur I realised that this is a simple shape: in world space this is just a simple axis-aligned box. So I implemented a shader that calculates the strength of the depth of field blur based on its distance to that 3D box, instead of distance to the camera. The result is exactly what I was looking for:
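The core of the idea is the distance from a point to an axis-aligned box. Below is a minimal Python sketch of that calculation, standing in for the shader code (which is not shown in this post). The box bounds and blur range are hypothetical. Note that the camera does not appear anywhere in the formula; in a pixel shader the resulting value would be written into the per-pixel render texture that the blur pass previously filled with camera depth.

```python
import math

def distance_to_box(point, box_min, box_max):
    """Euclidean distance from a 3D point to an axis-aligned box (0 inside the box)."""
    # Per axis: how far the point lies outside the box's [min, max] slab (0 if within it).
    outside = [max(lo - p, 0.0, p - hi) for p, lo, hi in zip(point, box_min, box_max)]
    return math.sqrt(sum(o * o for o in outside))

def area_dof_blur(point, box_min, box_max, blur_range):
    """Area-based DoF: 0 (sharp) inside the box, ramping up to 1 at blur_range away."""
    return min(distance_to_box(point, box_min, box_max) / blur_range, 1.0)

# Hypothetical sharp area: the playing field, from floor level up to a height of 3 units.
FIELD_MIN = (-10.0, 0.0, -10.0)
FIELD_MAX = (10.0, 3.0, 10.0)

print(area_dof_blur((0.0, 1.0, 0.0), FIELD_MIN, FIELD_MAX, 10.0))   # 0.0: inside the box
print(area_dof_blur((15.0, 1.0, 0.0), FIELD_MIN, FIELD_MAX, 10.0))  # 0.5: beside the field
print(area_dof_blur((0.0, 25.0, 0.0), FIELD_MIN, FIELD_MAX, 10.0))  # 1.0: high above it
```

The top of the box (here at a height of 3) is exactly the height at which the blur starts, and moving or resizing the box from gameplay code only means changing two vectors.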

Note that the effect is exaggerated in the smaller screenshot above; the full-resolution version has the normal, slightly less extreme blur.

A big benefit of this technique is that tweaking it is really straightforward, and that it is independent of the camera. I can easily set the sharp area from code based on the gameplay situation. It also gives me precise control over the height at which the blur starts.

Technically area based blur is quite easy to do. The depth of field blur shader already uses a render-texture that contains the depth of each pixel to the camera. I don't use this depth for anything else, so I can put any value I like there. The pixel shader can easily be adjusted to calculate depth in a different way.

This concept can also be applied to other shapes than boxes. For example, you can also have a sphere within which everything is sharp, while everything outside that sphere is blurred. One can even have several such spheres. How about a visual style where everything is blurred except for the areas around the characters? I think some other interesting visual styles can be made this way, especially in combination with very strong blur.
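For the sphere variant, the blur strength can come from the distance to the nearest sphere, which makes several spheres behave as one combined sharp area. A minimal sketch, with hypothetical sphere positions and radii standing in for characters:

```python
import math

def distance_to_spheres(point, spheres):
    """Distance to the nearest sharp sphere (0 inside any of them)."""
    return min(max(math.dist(point, centre) - radius, 0.0) for centre, radius in spheres)

def sphere_dof_blur(point, spheres, blur_range):
    """0 (sharp) inside any sphere, ramping up to 1 at blur_range from the nearest one."""
    return min(distance_to_spheres(point, spheres) / blur_range, 1.0)

# Hypothetical: a bubble of sharpness around each of two characters.
characters = [((0.0, 0.0, 0.0), 3.0), ((10.0, 0.0, 0.0), 2.0)]

for x in (1.0, 5.0, 100.0):
    print(x, sphere_dof_blur((x, 0.0, 0.0), characters, 4.0))
```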

I have seen some games that use non-distance based depth of field blur, like the beautiful Below, which seems to simply blur the top and bottom of the screen. I am not aware of games that use a 3D area as I use it. Let me know if there are other games that already do this.

The fun of writing your own shaders is that you can bend them to do whatever you want, including weird and unrealistic effects like basing my depth of field blur on a 3D box area. Feel free to use this idea in your own games, and I'd love to hear from you if you try it out!

Sunday, 12 October 2014

Other developers on the ideal patching frequency

Last week I wrote a blog post about how we think it is better to have a bigger patch once every one or two months than to have really small weekly patches. I was curious what other developers' experiences with this are, so I asked around for more opinions. Since patching on console and mobile is so different, I looked for developers who have a game on Steam, which is the platform that best enables regular patching.

Someone also pointed me to the Valve talk where they explained the importance of communication around patches and how this helped them grow Team Fortress 2. This is an incredibly interesting talk, so be sure to check it out:

Here are the replies I got from fellow developers:

Jamie Cheng from Klei

developer of Don't Starve

"While I have lots of opinions, I think it comes down to "it depends". Personally I think many devs try to do it the Valve Way only to find they don't really, truly understand why it works for Valve."

Mark Morris from Introversion

developer of Prison Architect (currently in Early Access)

"I guess the update frequency is linked quite closely to the style of development. For us, we wanted to produce quite meaty chunks (the hope being that we would get press coverage for individual updates). A month gave us enough time to develop decent additions, but was at a frequency that would still be engaging for the players. I could see weekly working, but I think that each update would be a lot less polished and would have a much more iterative development feel about it."

Kimiko from Berserk Games

developer of Tabletop Simulator (currently in Early Access)

"I think it would also depend on the game. For us in Early Access, we are one of those who patch every week or 2 weeks. Our game is a different spectrum compared to ones with stories, weapons, & what not. So patching each week works well for us. We're only doing this for Early Access though or for "bigger" updates, we'll spread it out a bit, like when we add in Oculus Rift or some of our other bigger stretch goals. Once we are out of Early Access, then we'll spread our updates out every month or so."

"There's only two of us and we work well putting out our patches each week, because it's usually about tweaking things, fixing bugs and implementing a feature. TTS is more for people to create their own games, so for us, it's better to get things out to help our users out more. Once we feel TTS is at the point where the community has the tools they need to create their own games to their fullest abilities, then we'd go out of Early Access and we wouldn't need to update every week anymore. But my point is, is that we'll still update after Early Access, but we won't have that greater need to do it each week like we do now, because it's at that "completed" stage and we'd focus on the bigger picture of adding in the bigger things from our Kickstarter stretch goals, and find ways to improve it in general."

"I think we probably spoiled our community because of our weekly updates and who knows what the uproar will be if we switch to monthly, but it's probably something we'd do gradually and we'd let our community know since we're pretty transparent. [...] As long as we keep our community in the loop, that's most important."

Pete Angstadt from Turtle Sandbox

developer of Cannon Brawl (recently released out of Early Access)

"I think the regularity of update scheduling, whether it's a week or a month is probably the most important part. That and communicating the schedule to your audience. [...] Vlambeer has a weekly schedule which they communicate to players with their livestream. Other games I think have done 'in-game' countdown timers to the next patch, or at least had the latest patch notes visible in game. If we had to do it over again, I would have gone with a very regular monthly patching schedule and included patch notes in game."

Roel Ezendam from Ragesquid

developer of Action Henk (currently in Early Access)

"We recently switched from bi-weekly to monthly updates for our Early Access game Action Henk. The biggest improvement that we have noticed is that we have far less overhead that's being spent on finishing and releasing the update. With a short update cycle the game constantly needs to be in a stable state, which makes it harder to work on larger features or a big overhaul. It also just takes time to publish the update and check if everything is working properly. These bi-weekly updates basically put us in a constant state of crunch, whereas the monthly updates give us a bit more breathing room."

Erik Johnson from Arcen Games

developer of AI War: Fleet Command and The Last Federation

"We've found that patching often isn't a bad thing if supporting your core community members (in effort to expand your core community) is your aim. [...] Anyway, I agree that this isn't a solid way to market the game in the main, but that's where our larger, more content-focused updates and DLC releases come in. I've found you can't tap the well too many times no matter how you slice it (unless you're in that fortunate position to have a sizable audience just begging for more) as far as reaching out to new players -- but a consistent stream of smaller updates keeps the community you already have established active and growing. That said, we do find that even our most popular games occasionally hit periods of patch ennui for both our community and our development team. :)"

Josef Vorbeck from Chasing Carrots

developer of Cosmonautica (currently in Early Access)

"We update Cosmonautica in 2 week cycles. We even did this before Early Access, because it's our standard rhythm with two week sprints. And we think, that we can deliver enough in two weeks to make these updates meaningful. Also we think that it's crucial for an Early Access title, that the players see constant progress during the development. These days Early Access has a mediocre reputation, because some devs are leaving their games or decide their games are finished, even though more features were planned. With our update cycles and constant communication we're trying to earn the trust of the players. It just works for us, the response so far is really great. And of course it's important for us to get the valuable feedback from our players on a regular basis. But after our final release we might switch our update rhythm to aim for larger content updates which deliver their own story, as Joost stated."

While digging for opinions I also got a reply on Gamedev.net from a user named Orymus3, who made a really interesting remark about the term "patch" itself:

"I would avoid using the term 'patch'. To most people this suggests you are fixing bugs, which also infers you develop bugs. What you really want to put forward is the fact you're actively working in the game, and are releasing new content. 'Content Push', 'Release', etc. are all better terms to refer to what you're doing. This may not appear like much, but imagine that a player comes across a post about your game (it's the only thing he's ever seen) and he sees 'Patch'. He's likely to think: here's another incomplete Beta filled with bugs, I'll give this a pass."

Sunday, 5 October 2014

Why patching too often is a bad idea / The magic of the Vault

Recently I have seen quite a few games that launched with the plan to patch really often, especially Early Access games. Most of those games patch once a week or once every two weeks. This may seem like a good idea: iterate quickly and show clearly to the user that you really do the best you can to make good on the promise of improving the game. For a time with Awesomenauts we also tried to patch as often as possible, but by now we think that is actually a bad idea.

The main reason for this is that the more often you patch, the smaller the patches are. Lots of small patches means lots of hardly noticeable changes to the game. Why would a user come back to your game because of 5 balance tweaks, 3 bug fixes and improved graphics for 2 weapons? Why would a regular player be excited about this? The changes done in weekly patches are just too small to make an impact.

Combining a bunch of those smaller patches together creates something much more noteworthy. In a bigger patch once every one or two months you could overhaul the graphics of all the weapons instead of just two, do dozens of balance tweaks and fix a ton of bugs. Simply combining all the changes from a bunch of micro-patches into one bigger patch turns it from a bunch of uninteresting patches to one exciting patch that really improves the game significantly.

An important note here is that I am NOT suggesting that development should be slowed down. On the contrary: keep improving and extending that game as much as you can, just like we try to do with Awesomenauts! I am only suggesting grouping the changes and releasing them together.

The goal of a games-as-a-service model, where you are constantly improving the game, is not only to improve the game, but also to excite the players: keep them playing longer, bring back players who stopped playing and attract new players. To excite players you need stories. Not literal stories, but things that players can discuss with their friends. Bigger patches are much more interesting to talk about.

Having bigger patches also allows you to create new 'stories' without additional development. You can give a patch a name and a theme simply by looking at the changes and finding some similarities. This way you can create a new story for the patch itself and make it feel like a big event.

Since December we have been doing active marketing for each Awesomenauts patch. We announce a patch beforehand and slowly reveal information about it. We have made a special website for this: Voltar's Vault.

A couple of weeks before a patch comes out we open the Vault. At that moment it contains only locks. Then in the weeks leading up to the patch we gradually reveal more about what is going to be in it. Usually we first give a vague hint, then a couple of days later we announce what it really is. This builds enormous anticipation for our patches. Our community creates a Vault topic on our forum for every patch and posts hundreds of pages of discussion there, as you can see in this topic for patch 2.7. The more interesting the features are and the more suggestive (but cryptic!) the hints are, the crazier it gets.

The result is that we can create crazy hype for every patch and get big peaks in our playerbase whenever we release a patch. The day a patch launches we have twice as many players as on a normal day. Sometimes we can even create significant sales bumps ourselves. Those are small compared to the bump of a Steam sale, but Steam sales require getting lucky with Valve to get a slot. We can create sales peaks through hyped patches ourselves.

By increasing the size of the patch and adding a name, theme, Vault website and hints we can create real stories, worth discussing with your friends. In some cases they are even worth being picked up by the press: a couple of big news websites (including Destructoid) often write about our patches. This only works because there is enough time in between patches to actually hype them, and because patches are big enough to make this worthwhile.

Note that if you look at our patch notes you might get a different impression. We do many more patches than I describe here. The reason for this is that we distinguish between major patches and hotfixes. A hotfix is a small patch that only fixes some immediate issues. Obvious bugs or even crashes should not be left in the game for a month, so we don't bunch up fixes for game-breaking issues. Hotfixes generally don't add anything new: they just fix a broken game experience.
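As a rough illustration of the hotfix versus major patch split described above, here is a minimal Python sketch. The `Change` type, its `game_breaking_fix` flag and the example changes are all hypothetical; the only rule it encodes is the one from the text: game-breaking fixes ship right away, everything else waits for the next big patch.

```python
from dataclasses import dataclass


@dataclass
class Change:
    description: str
    # Hypothetical flag: True for crashes and obvious bugs that break the game.
    game_breaking_fix: bool = False


def split_release(changes):
    """Split pending changes into an immediate hotfix and the next major patch.

    Game-breaking fixes ship right away; everything else (balance tweaks,
    new content, minor fixes) waits to be bundled into the next big patch.
    """
    hotfix = [c for c in changes if c.game_breaking_fix]
    major_patch = [c for c in changes if not c.game_breaking_fix]
    return hotfix, major_patch


# Hypothetical example changes.
pending = [
    Change("Fix crash when a player leaves during respawn", game_breaking_fix=True),
    Change("Balance tweak: lower a weapon's damage by 5%"),
    Change("New graphics for two weapons"),
]
hotfix, major_patch = split_release(pending)
print([c.description for c in hotfix])
print(len(major_patch), "changes held for the next major patch")
```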

We started bunching features into bigger patches after we heard about how Valve went the same road with Team Fortress 2. Apparently they did weekly patches for quite a while, sometimes doing even two patches a week. They claim to have much more success with the patches since they bunch them up and give them a name and a theme. I tried finding the article where I read this but couldn't find it. Regardless, have a look at Team Fortress 2's patching history and you will see that Valve creates big patches with some time in between (plus apparently a lot of small tweaking patches).

So far this post has mostly been about marketing. There is of course also a practical side to patching and continuous development. When we want to do big changes or add complex new features to Awesomenauts we need time to balance and tweak those. A big portion of that is done through semi-public betas on Steam. When we add a new character we need several weeks of beta to get his balance right for launch. This workflow is not very compatible with weekly patches.

Also, focussing on having something new ready every week probably requires incredibly short and focussed development cycles. This might sound good but I doubt production can be this focussed permanently for a year or more without burning developers out. Doing weekly patches requires really good management to keep it going and likely adds constant pressure on the dev team.

In the end patches are also a form of marketing: they need to create stories. Not literal stories, but things that are worthwhile to talk about. Things that press can pick up on, things that players can discuss.

PS: here's the follow-up blogpost to this one: Other developers on the ideal patching frequency

Sunday, 21 September 2014

Relay servers

Last week I discussed the core network structures for games. There is one really important topic that I left out then: relay servers. Relay servers are especially important to understand since I have recently heard them confused with dedicated servers quite often. Today I would like to explain what relay servers are, and what they are not.

A relay server is essentially just a computer that sends and receives packets. It does not really process data and does not do any gameplay logic. All it does is that if player A sends a packet to player B, then instead of sending it directly player A sends it to the relay server. The relay server then sends it to player B. The relay server is essentially just a glorified router.
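To make the "glorified router" idea concrete, here is a minimal Python sketch. It replaces real sockets with in-memory queues so it runs anywhere; the `Relay` class and its methods are hypothetical names, not any real library's API. The point is that the relay never looks inside a packet: it only passes it on to the intended recipient.

```python
from collections import defaultdict, deque


class Relay:
    """Toy in-memory relay: it forwards packets and never looks inside them."""

    def __init__(self):
        # One queue per player stands in for that player's network connection.
        self.inboxes = defaultdict(deque)

    def forward(self, sender, recipient, payload: bytes) -> None:
        # Player A hands the packet to the relay instead of sending it to B
        # directly; the relay passes it on unchanged, tagged with the sender.
        self.inboxes[recipient].append((sender, payload))

    def receive(self, player):
        """Return the next (sender, payload) for this player, or None."""
        inbox = self.inboxes[player]
        return inbox.popleft() if inbox else None


relay = Relay()
relay.forward("A", "B", b"jump")
print(relay.receive("B"))  # ('A', b'jump')
```

A real relay would do the same thing with UDP sockets and a table mapping player IDs to addresses, but the logic stays this thin: no gameplay code runs on it.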

So why is this useful? Relay servers have two big advantages. The first is that players can practically always connect to them. Security measures in routers are a big problem in internet connections, causing many users to not be able to connect to each other directly. Usually this can be solved in the router settings by enabling UPnP or setting up port forwarding, but many users don't know how to do this. Techniques like NAT punch-through help, but still don't solve the problem in a lot of cases.

An important aspect of connectivity issues is that if one of the two computers that try to connect to each other is set up entirely right, then it is almost always possible to connect the two computers, no matter how badly the other computer is set up. This is where relay servers come in: the developer manages those and can thus make sure they are set up optimally. So even if two players cannot connect to each other directly, it is extremely likely that they can both connect to the relay server and send packets to each other through that.

The other big benefit of relay servers is that they can massively reduce packet count, especially in peer to peer situations. As I explained in a previous blogpost, packet count is an important factor in connection quality.

The public internet does not support multicasting, so if you want to send the same message to several other players, you have to send it several times. A relay server can work around this. Whenever a player wants to send to all other players, she sends only one packet to the relay server. The relay server then copies the packet and sends it to each of the other players. If several players are all sending to the same player, the relay server can also combine their packets into one bigger packet. These features greatly reduce packet count and bandwidth in a peer to peer situation, or for the host in a situation where a player is the host. This way relay servers theoretically make it possible to have a peer to peer game without dedicated servers.
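A quick back-of-the-envelope calculation shows how much this saves. The sketch assumes every player sends one update per tick to every other player, and that the relay both copies packets (fan-out) and merges everything addressed to the same player in that tick into one packet (fan-in). Real games rarely behave this uniformly, so treat the numbers as an idealised count.

```python
def p2p_packets_per_tick(n):
    """Direct peer to peer: every player sends its own copy to each other player."""
    per_player_sent = n - 1
    per_player_received = n - 1
    total = n * (n - 1)
    return per_player_sent, per_player_received, total


def relay_packets_per_tick(n):
    """Through a relay that copies (fan-out) and combines (fan-in) packets."""
    per_player_sent = 1       # one packet up to the relay
    per_player_received = 1   # one combined packet down from the relay
    total = 2 * n             # n packets up plus n packets down
    return per_player_sent, per_player_received, total


for n in (2, 4, 6, 8):
    print(n, p2p_packets_per_tick(n), relay_packets_per_tick(n))
```

For a six-player match this gives 5 packets sent and 5 received per player per tick when connecting directly, against 1 and 1 through the relay. With only two players the relay doesn't reduce anything: each player still sends and receives one packet, and the total on the wire even doubles, so the benefit grows with player count.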

Note that dedicated servers have these exact same benefits. Players can practically always connect to them and players only have to send packets to the dedicated server instead of to all other players. For this reason there is normally no point to having relay servers if you already have dedicated servers.

A big downside to relay servers is that sending all data through the relay server adds a little bit of latency to the connection. This is especially problematic if players from several continents are playing together in one match. You might think intercontinental play should never automatically happen, but you need thousands of simultaneous players to always avoid this. Even then international friends might send each other invites.

Let's say we have a match with four European players and two Australian players. The relay server for this match is in Europe. The connection between the Europeans will likely be a little bit slower but still fine because the relay server is close. The connection between a European player and an Australian player will also not be affected too much, because the data needs to be sent that far anyway. The problem happens between the two Australian players: since everything goes through the relay server, their traffic now goes through Europe instead of directly, massively increasing their ping!
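The arithmetic behind this example can be sketched in a few lines of Python. The latencies are hypothetical round numbers, not measurements, and the sketch simplifies by treating "player to relay in the same region" the same as "player to player in the same region". Relayed traffic takes two legs (sender to relay, relay to recipient) instead of one.

```python
# Hypothetical one-way latencies in milliseconds, chosen as round numbers.
# Real values vary a lot per connection.
ONE_WAY_MS = {
    frozenset({"EU"}): 15,         # within Europe
    frozenset({"AU"}): 15,         # within Australia
    frozenset({"EU", "AU"}): 150,  # Europe <-> Australia
}


def one_way(a, b):
    return ONE_WAY_MS[frozenset({a, b})]


def relayed(a, b, relay_region):
    # Sender -> relay -> recipient: two legs instead of one.
    return one_way(a, relay_region) + one_way(relay_region, b)


for a, b in [("EU", "EU"), ("EU", "AU"), ("AU", "AU")]:
    print(f"{a}->{b}: direct {one_way(a, b)} ms, via EU relay {relayed(a, b, 'EU')} ms")
```

With these numbers the Europeans go from 15 to 30 ms, European to Australian goes from 150 to 165 ms, and the two Australians go from 15 to 300 ms, which is exactly the imbalance described above. Ping is a round trip, so the difference players feel is twice as large.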

For this reason I would never want to use rigid relay servers for a game where latency matters. If players can connect directly and have a fast enough connection to handle the packet count, then it is probably faster to let them communicate directly. This also saves on the cost of running expensive relay servers. I think relay servers are mostly useful as a last resort for players who otherwise cannot connect at all, and for players whose internet is too slow for the number of players they need to send to.
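The "relay as a last resort" policy could look roughly like this Python sketch. The `Player` type, the `max_packets_per_sec` measurement and the `direct_possible` flag are hypothetical inputs a game would have to gather during connection setup; a real implementation would likely decide per pair of players rather than with a single flag.

```python
from dataclasses import dataclass


@dataclass
class Player:
    name: str
    # Hypothetical measurement: packets per second this player's connection
    # can send without trouble.
    max_packets_per_sec: int


def choose_route(player: Player, match_size: int, send_rate_hz: int,
                 direct_possible: bool) -> str:
    """Prefer direct connections and use the relay only as a last resort."""
    if not direct_possible:
        # NAT punch-through, UPnP and port forwarding all failed.
        return "relay"
    # Sending directly costs one packet per other player per update.
    direct_load = (match_size - 1) * send_rate_hz
    if direct_load > player.max_packets_per_sec:
        # Too slow for the packet count; via the relay one packet per update suffices.
        return "relay"
    return "direct"


print(choose_route(Player("fast", 500), 6, 20, True))   # direct
print(choose_route(Player("slow", 60), 6, 20, True))    # relay
print(choose_route(Player("fast", 500), 6, 20, False))  # relay
```

Defaulting to "direct" keeps latency low for everyone who can connect normally and only pays the relay's latency and hosting cost for players who need it.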

Awesomenauts currently does not have relay servers. Instead it solves the problem of two players not being able to connect directly by sending through one of the other players. This often works fine but it is an imperfect solution: it increases the burden on that player's connection. Also, in extremely rare cases a player cannot connect to anyone in the match.
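The post doesn't describe how Awesomenauts picks the player to send through, so the following Python sketch is only a hypothetical version of the idea. It looks for a third player that both sides can reach directly, and returns `None` in the rare case where nobody qualifies. Preferring the player who is already forwarding the least traffic is my own assumption, based on the point above that forwarding increases the burden on that player's connection.

```python
from typing import Callable, Dict, Iterable, Optional


def find_intermediate(a: str, b: str, players: Iterable[str],
                      can_connect: Callable[[str, str], bool],
                      forward_load: Dict[str, int]) -> Optional[str]:
    """Pick a third player that both a and b can reach directly.

    Returns None when nobody qualifies, the rare case where no route exists.
    """
    candidates = [p for p in players
                  if p not in (a, b) and can_connect(a, p) and can_connect(p, b)]
    if not candidates:
        return None
    # Forwarding costs the intermediate player bandwidth, so prefer whoever
    # is forwarding the least traffic already.
    return min(candidates, key=lambda p: forward_load.get(p, 0))


# Hypothetical match: A and B cannot reach each other, but both reach C and D.
direct_links = {frozenset(pair) for pair in
                [("A", "C"), ("B", "C"), ("A", "D"), ("B", "D"), ("C", "D")]}


def can_connect(x, y):
    return frozenset((x, y)) in direct_links


print(find_intermediate("A", "B", ["A", "B", "C", "D"], can_connect, {"C": 2}))  # D
```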

We are currently putting a lot of effort into improving the connection quality for players in Awesomenauts. Relay servers are a feature we are considering for the future, but right now we think we can gain more by improving matchmaking first. We are writing a completely new matchmaking system that will allow us to match players better based on their connection and location. Better matchmaking also brings many other benefits that are unrelated to connection quality. A big recent improvement is that we managed to halve (!) the average bandwidth and packet count used by Awesomenauts. In the long run we will need to research relay servers further to know how beneficial their trade-off of connection quality versus latency would really be.

To summarize I would like to stress that relay servers are not the same as dedicated servers. Relay servers are a tool for reducing bandwidth and packet count and for improving connection quality, potentially at the cost of latency.

Note: I have edited this post on 27-9-2014 to remove references to the Photon network library. It turned out they had added new features that I was not aware of and that made my analysis of what Photon offers incorrect and irrelevant to this post.

A relay server is essentially just a computer that sends and receives packets. It does not really process data and does not do any gameplay logic. All it does is that if player A sends a packet to player B, then instead of sending it directly player A sends it to the relay server. The relay server then sends it to player B. The relay server is essentially just a glorified router.

So why is this useful? Relay servers have two big advantages. The first is that players can practically always connect to them. Security measures in routers are a big problem in internet connections, causing many users to not be able to connect to each other directly. Usually this can be solved in the router settings by setting UPNP or port forwarding, but many users don't know how to do this. Techniques like NAT punch-through help, but still don't solve the problem in a lot of cases.

An important aspect of connectivity issues is that if one of the two computers that try to connect to each other is set up entirely right, then it is almost always possible to connect the two computers, no matter how badly the other computer is set up. This is where relay servers come in: the developer manages those and can thus make sure they are set up optimally. So even if two players cannot connect to each other directly, it is extremely likely that they can both connect to the relay server and send packets to each other through that.

The other big benefit of relay servers is that they can massively reduce packet count, especially in peer to peer situations. As I explained in a previous blogpost, packet count is an important factor in connection quality.

The internet does not allow multicasting, so if you want to send the same message to several other players, then you just need to send it several times. A relay server can work around this. Whenever a player wants to send to all other players, she sends only one packet to the relay server. The relay server then copies the packet and sends it to each client. If several players are all sending to the same player the relay server can also combine their packets into one bigger packet. These features greatly reduce packet count and bandwidth in a peer to peer situation, or for the host in a situation where a player is the host. This way relay servers theoretically make it possible to have a peer to peer game without dedicated servers.

Note that dedicated servers have these exact same benefits. Players can practically always connect to them and players only have to send packets to the dedicated server instead of to all other players. For this reason there is normally no point to having relay servers if you already have dedicated servers.

A big downside to relay servers is that sending all data through the relay server adds a little bit of latency to the connection. This is especially problematic if players from several continents are playing together in one match. You might think intercontinental play should never automatically happen, but you need thousands of simultaneous players to always avoid this. Even then international friends might send each other invites.

Let's say we have a match with four European players and two Australian players. The relay server for this match is in Europe. The connection between the Europeans will likely be a little bit slower but still fine because the relay server is close. The connection between a European player and an Australian player will also not be affected too much, because the data needs to be sent that far anyway. The problem happens between the two Australian players: since everything does through the relay server, their traffic now goes through Europe instead of directly, massively increasing their ping!

For this reason I would never want to use rigid relay servers for a game where latency matters. If players can connect directly and have a fast enough connection to handle the packet count, then it is probably faster to let them communicate directly. This also saves on the cost of running expensive relay servers. I think relay servers are mostly useful as a last resort for players who otherwise cannot connect at all, and for players whose internet is too slow for the number of players they need to send to.
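The "relay as a last resort" policy could look something like the sketch below. The `Connectivity` fields, the per-peer bandwidth figure and `choose_route` are all hypothetical; a real game would measure these values and might decide per peer instead of per player.

```python
from dataclasses import dataclass


@dataclass
class Connectivity:
    can_connect_directly: bool  # e.g. NAT traversal to every peer succeeded
    upload_kbps: float          # the player's available upload bandwidth


def choose_route(conn: Connectivity, peer_count: int, kbps_per_peer: float) -> str:
    """Hypothetical policy: use direct connections when possible, relay as a last resort."""
    if not conn.can_connect_directly:
        return "relay"   # otherwise this player cannot play at all
    if conn.upload_kbps < peer_count * kbps_per_peer:
        return "relay"   # too slow to send a separate copy to every peer
    return "direct"      # usually lowest latency, and no relay cost
```

The order of the checks encodes the preference: a direct connection wins whenever it works and is fast enough, so relay capacity (and its cost) is only spent on players who need it.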

Awesomenauts currently does not have relay servers. Instead it solves the problem of two players not being able to connect directly by sending through one of the other players. This often works fine but it is an imperfect solution: it increases the burden on that player's connection. Also, in extremely rare cases a player cannot connect to anyone in the match.
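This post does not describe how the forwarding player is chosen, so the sketch below is only an assumption: it picks the third player with the lowest combined ping to both ends. The ping table format and `pick_intermediary` are hypothetical.

```python
def pick_intermediary(a, b, players, ping):
    """Pick a third player to forward traffic between a and b.

    `ping` maps frozenset({p, q}) -> round-trip ms for every pair of players
    that can connect directly; missing pairs cannot connect.
    Returns None when no player can reach both a and b.
    """
    best, best_cost = None, float("inf")
    for x in players:
        if x in (a, b):
            continue
        leg1 = ping.get(frozenset({a, x}))
        leg2 = ping.get(frozenset({x, b}))
        if leg1 is None or leg2 is None:
            continue  # x cannot reach one of the two players
        if leg1 + leg2 < best_cost:
            best, best_cost = x, leg1 + leg2
    return best
```

A `None` result corresponds to the rare case mentioned above where a player cannot connect to anyone. A fuller version might also weigh how much extra traffic the forwarding player can handle, since that is the burden this approach adds.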

We are currently putting a lot of effort into improving the connection quality for players in Awesomenauts. Relay servers are a feature we are considering for the future, but right now we think we can gain more by improving matchmaking first. We are writing a completely new matchmaking system that will allow us to match players better based on their connection and location. Better matchmaking also brings many other benefits that are unrelated to connection quality. A big recent improvement is that we managed to halve (!) the average bandwidth and packet count used by Awesomenauts. In the long run we will need to research relay servers further to know how beneficial their trade-off between connection quality and latency would really be.

To summarize, I would like to stress that relay servers are not the same as dedicated servers. Relay servers are a tool for reducing bandwidth and packet count and for improving connection quality, potentially at the cost of latency.

Note: I have edited this post on 27-9-2014 to remove references to the Photon network library. It turned out they had added new features that I was not aware of and that made my analysis of what Photon offers incorrect and irrelevant to this post.

Sunday, 14 September 2014

Core network structures for games

When starting to develop an online multiplayer game, you need to choose how to structure the netcode. Especially important is the question of which computer decides on which parts of the gameplay. There are roughly four models in common use in games these days. Today I would like to explain what those are and what their benefits and downsides are.

Here are those four basic structures (of course all kinds of hybrids and variants are possible):

Client-server

In the two versions of client-server there is one computer that is solely responsible for the entire game simulation: the server. The clients cannot make real gameplay decisions. This means that if a player presses a button, the input goes to the server, the server executes it and then sends the results back to the client.

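This adds significant lag to all input, which is of course totally unacceptable and kills the gameplay feel. To make a game playable with this model all kinds of tricks are needed. The best trick I am aware of is described in this must-read article by Valve. The basic idea is this:

- When the player presses a button, the client immediately processes it as if it has the authority to do so, starting animations and such. A message is also sent to the server.

- The server receives the button press a little bit later, so the server rewinds to the time of the button press, executes it, and then re-simulates to the current time.

- The server then sends the current state to the client.

- The client receives the latest state, but in the meantime more time has passed. So the client rewinds to the time at which the server sent the message, corrects its own state with what the authoritative server had decided, and then re-simulates locally to the current time.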

In other words: both the client and the server rewind and then re-simulate whenever a packet is received. Implementing rewinding mechanisms is a complex task and very difficult to add to an existing game. As far as I know this is nevertheless the best and most used approach.
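The rewind-and-re-simulate idea can be sketched with a per-tick history of snapshots. This is a minimal illustration assuming a fixed tick rate and a toy one-dimensional `State`; the class and method names are hypothetical and this is not Valve's code.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class State:
    x: int = 0   # position
    vx: int = 0  # velocity


def step(state: State, inp: Optional[int]) -> State:
    """Hypothetical one-tick simulation: an input, if any, sets the velocity."""
    vx = state.vx if inp is None else inp
    return State(x=state.x + vx, vx=vx)


class RewindingSim:
    """Keeps a snapshot per tick so it can rewind and re-simulate."""

    def __init__(self) -> None:
        self.tick = 0
        self.history: Dict[int, State] = {0: State()}  # state at start of tick
        self.inputs: Dict[int, int] = {}               # tick -> input in that tick

    @property
    def state(self) -> State:
        return self.history[self.tick]

    def advance(self) -> None:
        self.history[self.tick + 1] = step(self.state, self.inputs.get(self.tick))
        self.tick += 1

    def _resimulate_from(self, start_tick: int) -> None:
        for t in range(start_tick, self.tick):
            self.history[t + 1] = step(self.history[t], self.inputs.get(t))

    def apply_late_input(self, input_tick: int, value: int) -> None:
        """Server side: insert an input that happened in the past, then catch up."""
        self.inputs[input_tick] = value
        self._resimulate_from(input_tick)

    def apply_authoritative(self, server_tick: int, server_state: State) -> None:
        """Client side: replace our guess with the server's state, then catch up."""
        self.history[server_tick] = server_state
        self._resimulate_from(server_tick)
```

A real implementation would only keep a limited window of snapshots and would have to rewind every object that the input could affect, which is exactly why this is hard to retrofit into an existing game.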

The difference between the two client-server architectures is who the server is. Either it is one of the players, or it is a computer that the game's developer/publisher manages. A dedicated server is usually better, but much more complex and expensive, as the developer needs to manage a scalable number of servers. The fiascos at the launches of Diablo III and SimCity showed how difficult this is to do. The more successful the game, the more difficult dedicated servers are to pull off. They are also simply expensive.

Peer to peer

The third architecture is pure peer to peer. Here no single computer is responsible for the entire game simulation. Instead the simulation is spread out over all of the players. The challenge then is how to divide responsibilities over the players. Awesomenauts uses this model and our distribution of the simulation is simple: each player simulates his own characters and bullets. This has a big benefit: player input can always be handled immediately. No rewinding structures are needed and there is never any input lag for the player. This also makes it much easier to add to an existing game.
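Here is a minimal sketch of that ownership rule: each machine applies input to its own objects immediately and only accepts updates for other objects from the peer that owns them. The `Entity`/`PeerGame` classes and the message format are hypothetical, not Awesomenauts code.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    entity_id: int
    owner: str          # player whose machine simulates this entity
    x: float = 0.0


@dataclass
class PeerGame:
    local_player: str
    entities: dict = field(default_factory=dict)

    def add(self, entity: Entity) -> None:
        self.entities[entity.entity_id] = entity

    def local_move(self, entity_id: int, dx: float) -> dict:
        """Apply local input at once (no round trip) and return the update to broadcast."""
        e = self.entities[entity_id]
        if e.owner != self.local_player:
            raise PermissionError("only the owner simulates this entity")
        e.x += dx
        return {"id": entity_id, "x": e.x, "sender": self.local_player}

    def on_remote_update(self, msg: dict) -> None:
        """Accept state for an entity only from the peer that owns it."""
        e = self.entities.get(msg["id"])
        if e is None or e.owner != msg["sender"] or e.owner == self.local_player:
            return  # unknown entity, wrong sender, or one we simulate ourselves
        e.x = msg["x"]


game_a, game_b = PeerGame("A"), PeerGame("B")
for g in (game_a, game_b):
    g.add(Entity(1, "A"))
    g.add(Entity(2, "B"))
game_b.on_remote_update(game_a.local_move(1, 5.0))
```

Because `local_move` never waits for the network, input lag is zero for the owner; the other players see the result one network delay later.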

Peer to peer has some heavy drawbacks though. The biggest one is that lag becomes much more unpredictable. While in a client-server architecture only the lagging player suffers from his own lag, in a peer to peer game the other players will also notice if one player has a bad internet connection.

Peer to peer usually introduces complex synchronisation situations when the simulations of two players are not compatible. A good example of this can be found in my previous blogpost on Awesomenauts' infamous sliding bug. Care needs to be taken to recognise and handle such situations. In most game concepts few of these problems will pop up though: in Awesomenauts pushing other players is the only really complex part regarding conflicting synchronisation.

Another major downside of peer to peer is the amount of network traffic needed. Since all players need to talk to all other players, it requires many more network packets. In client-server only the server needs to talk to everyone, so only one player carries that load instead of all of them. Even better for packet count is using dedicated servers: the entire burden falls on servers that the game developer provides.
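The packet counting can be made explicit. This sketch assumes every machine sends one state packet per network tick to each machine it talks to, with no relay and no batching; `packet_load` and the model names are hypothetical.

```python
from typing import Tuple


def packet_load(n_players: int, model: str) -> Tuple[int, int]:
    """Return (most packets any player sends per tick,
    number of players who must send to every other player)."""
    if model == "peer_to_peer":
        return n_players - 1, n_players  # everyone sends to everyone
    if model == "player_host":
        return n_players - 1, 1          # only the hosting player sends to all
    if model == "dedicated":
        return 1, 0                      # players only send to the server
    raise ValueError(f"unknown model: {model}")


for model in ("peer_to_peer", "player_host", "dedicated"):
    print(model, packet_load(6, model))
```

For a six-player match this prints `(5, 6)`, `(5, 1)` and `(1, 0)`: peer to peer puts the heaviest load on all six players, a player host puts it on one, and with dedicated servers no player carries it.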

Deterministic peer to peer lockstep

The fourth and final basic structure is deterministic peer to peer lockstep. This model is mostly used for RTS games. This is also a peer to peer model but here we don't need to worry about which player manages which objects. Instead every client simulates everything in the exact same way. The only thing that needs to be sent over the network is each player's actions. The game runs as lots of really short turns: every step the game collects the commands from all players over the network and then simulates the next step. This is not limited to turn-based games: by doing lots of really short steps it can feel like a real-time game.
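A minimal lockstep loop might look like the sketch below: a step only runs once every player's commands for that step have arrived (an empty list counts as "no commands"), and commands are applied in a fixed order so every client computes the same result. The integer "units" state and all names are hypothetical.

```python
from collections import defaultdict


class LockstepSim:
    """Minimal lockstep sketch: wait for every player's commands, then step."""

    def __init__(self, players):
        self.players = set(players)
        self.step = 0
        self.pending = defaultdict(dict)           # step -> {player: commands}
        self.units = {p: 0 for p in self.players}  # hypothetical integer game state

    def receive(self, player, step, commands):
        self.pending[step][player] = commands

    def try_advance(self) -> bool:
        cmds = self.pending.get(self.step, {})
        if set(cmds) != self.players:
            return False             # still waiting for someone: the game stalls
        for player in sorted(cmds):  # fixed order so every client agrees
            for amount in cmds[player]:
                self.units[player] += amount
        del self.pending[self.step]
        self.step += 1
        return True


sim = LockstepSim(["a", "b"])
sim.receive("a", 0, [3])
stalled = not sim.try_advance()  # b has not sent its step-0 commands yet
sim.receive("b", 0, [])
advanced = sim.try_advance()
```

The stall in `try_advance` is where the input lag described below comes from: nobody can simulate a step until the slowest player's commands for it have arrived.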

Deterministic peer to peer has the enormous benefit that you hardly need to send anything. Only player actions need to be sent. If everyone starts the game in the same situation and runs the exact same steps, then the game will remain in synch without ever sending updates over the network. Therefore this model is highly suitable for RTS games, since they have so many units that synchronising everything is often infeasible. An old but still great article on implementing full determinism is this one: 1500 Archers on a 28.8: Network Programming in Age of Empires and Beyond.

A downside to this model is that it usually adds quite a lot of lag to controls, since actions cannot be executed until all players know about them. Such input lag can be hidden by playing sounds and visual effects immediately when the user clicks. This way the player won't notice that his units don't react immediately.

Note that deterministic lockstep can also be combined with a client/server connection model where the data always flows through the server instead of directly between all players.

Implementing full determinism is incredibly difficult. If any differences exist between the simulations on the clients, then these differences will grow over time and result in the desynchronisation of the game. Lots of tricks need to be used to achieve determinism. For example, floats cannot safely be used because their rounding can differ between compilers and processors, so all logic needs to be built on integers. Random number generators can only be used if their seeds are synched and they are used in the exact same way. This might for example go wrong if one player runs on a higher graphics quality and thus has extra particles on his screen. Those particles might also use the random number generator and thus desynch it. A simple solution is to use a separate random generator for non-gameplay objects, but this is easy to forget, and forgetting it breaks the entire game.
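Both tricks from this paragraph fit in a short sketch: a self-written integer RNG (here a 32-bit linear congruential generator, chosen only because its behaviour is fully specified), 16.16 fixed-point multiplication instead of floats, and a separate generator for cosmetic effects. `Lcg`, `Match` and the damage/particle logic are hypothetical examples.

```python
class Lcg:
    """32-bit linear congruential generator: same seed gives the same sequence everywhere."""

    def __init__(self, seed: int):
        self.state = seed & 0xFFFFFFFF

    def next_int(self, bound: int) -> int:
        self.state = (1664525 * self.state + 1013904223) & 0xFFFFFFFF
        return (self.state >> 16) % bound


FIXED_ONE = 1 << 16  # 16.16 fixed point: 1.0 is stored as 65536


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point numbers using integer math only."""
    return (a * b) >> 16


class Match:
    def __init__(self, seed: int):
        self.gameplay_rng = Lcg(seed)               # seed shared by all clients
        self.cosmetic_rng = Lcg(seed ^ 0x9E3779B9)  # local-only visual effects

    def spawn_particles(self, quality: int) -> None:
        # Particle count depends on graphics settings, so only the cosmetic RNG is used.
        for _ in range(quality * 10):
            self.cosmetic_rng.next_int(360)

    def roll_damage(self) -> int:
        return 10 + self.gameplay_rng.next_int(5)


low, high = Match(42), Match(42)
low.spawn_particles(quality=1)
high.spawn_particles(quality=3)
```

If `spawn_particles` drew from `gameplay_rng` instead, the two clients would consume different numbers of values and their damage rolls would drift apart.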

Getting determinism right is such a challenge that many games that use it add a mechanism to check the correctness of the simulation. They regularly send a checksum of the entire gamestate over the network. Checksums are small so this uses hardly any bandwidth. If the checksums are not the same then the game has desynched. To fix a desynch we could pause the game, send the entire simulation over the network and then continue from there. In older games you might recognise this problem when you got kicked out of a game because of a "synchronisation error".
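A desynch check along these lines could be sketched as follows: serialise the gameplay state in a canonical order, take a CRC32 of it (4 bytes on the wire) and compare with the checksums other players report for the same step. The unit tuple layout and function names are hypothetical; `struct.pack` and `zlib.crc32` are standard Python.

```python
import struct
import zlib


def state_checksum(units) -> int:
    """CRC32 over a canonical byte encoding of the gameplay state.

    `units` is a hypothetical list of (unit_id, x, y, hp) integer tuples.
    """
    data = bytearray()
    for unit_id, x, y, hp in sorted(units):  # same order on every client
        data += struct.pack("<iiii", unit_id, x, y, hp)
    return zlib.crc32(bytes(data))


def desynched_players(local_sum: int, remote_sums: dict) -> list:
    """Return the players whose checksum for this step differs from ours."""
    return [player for player, s in remote_sums.items() if s != local_sum]


ours = [(1, 10, 20, 100), (2, 5, 5, 50)]
same_but_reordered = list(reversed(ours))
one_hp_off = [(1, 10, 20, 99), (2, 5, 5, 50)]
```

Sorting before hashing matters: two clients can hold identical state in a different container order, and that alone should not count as a desynch.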

There are of course many more subtleties to network architecture than I have explained here. All kinds of hybrids are possible and there are many details that I have not mentioned, like vulnerability to cheaters and host migration. I cannot discuss them all today, but I hope this blogpost has given a good summary of the basics. One important topic that really needs to be explained in combination with the above information is relay servers, so I will cover that next week.
